Brain-computer musical interfacing (BCMI) research is still in its infancy in our department, but thanks to the author's efforts, some small strides in that direction are being made. To allow the consumer-grade Neurosky EEG devices to communicate with the Max/MSP environment, docent Oliver Frei built a Java external for Max/MSP. The author in turn used this external to create three pieces that tested the feasibility of his ideas and the degree of control that could be achieved. All three pieces were performed successfully several times.

Photo by: Mika Thiele

On a more personal note: I have always been fascinated by the possibility of inferring thought processes simply by reading the minuscule electrical signals emanating from the brain, hidden in a sea of noise. As a practicing musician, though, I take a somewhat pragmatic approach to musical interfaces: any new interface I choose to use has to let me express myself in a way that others don't, or at least make that way of expression easier. And let us be frank: for healthy individuals, the BCI is still more of a novelty than a reliable tool, let alone a convenient one. Bearing all that in mind, and because of some very personal experiences as well, I have come to the conclusion that the area where BCMI would shine is healthcare. Working with patients who are otherwise unable to express themselves, such as comatose patients or patients with locked-in syndrome, and trying to provide them with some relief, to give them back some sense of control, or at the very least to offer them entertainment: that was my ultimate goal for this project.

Mind Meld I

“Mind Meld I” was the first piece I wrote, more as a proof of concept and a software instrument than as a real musical piece. It was written for two performers using Neurosky EEG devices. As far as the score goes, the performers were given a set of simple instructions:

Relax for a few minutes with your eyes closed, almost as if asleep. Then “wake up” slowly, remember that you are on stage. Open your eyes and allow yourself to be nervous if you feel like it. Think of what your partner is doing and try to follow the music. Stay like that for a minute. Then close your eyes and “go back to sleep”.

To give the piece a firmer form, I limited it to five minutes, with a 30-second fade-in at the start and a fade-out at the end. A lot of time was spent developing a mapping strategy since, as Eduardo Miranda stated: “In algorithmic composition, the mapping of gestures to sounds may be considered the composition itself.”[1]

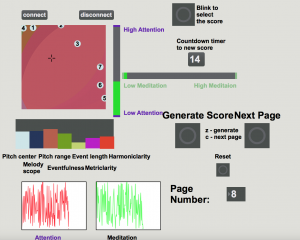

The few-to-many mapping was done using Max's Nodes object. In the very early stages I considered using neural networks, but with my final goal for the project in mind, I concluded that they would have been the wrong path. A network would need to be trained for each patient individually, which requires feedback from the user to evaluate the network's performance, and that is impossible for comatose or locked-in patients. I therefore chose the Nodes object, hoping that in a clinical trial the patients themselves would learn through biofeedback to control their thought patterns well enough to express their musical ideas.
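The idea behind a Nodes-style few-to-many mapping can be illustrated outside Max. The Python sketch below is an approximation under stated assumptions, not the object's documented formula: each node is a circle on the same 0-100 plane as the two EEG parameters, the weight falls off linearly from 1 at the centre to 0 at the edge, and the preset vectors (parameter settings for the generative engine) are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Node:
    x: float               # node centre on the 0-100 attention/meditation plane
    y: float
    radius: float          # area of influence
    preset: list[float]    # hypothetical parameter preset for the generative engine


def node_weights(cx: float, cy: float, nodes: list[Node]) -> list[float]:
    """Weight of each node: 1 at its centre, falling linearly to 0 at its edge (assumed fall-off)."""
    return [max(0.0, 1.0 - math.hypot(cx - n.x, cy - n.y) / n.radius) for n in nodes]


def interpolate(cx: float, cy: float, nodes: list[Node]) -> list[float] | None:
    """Blend the presets of every node the cursor falls inside: two inputs, many outputs."""
    w = node_weights(cx, cy, nodes)
    total = sum(w)
    if total == 0:
        return None  # cursor outside every node: the caller keeps its previous settings
    size = len(nodes[0].preset)
    return [sum(wi * n.preset[k] for wi, n in zip(w, nodes)) / total for k in range(size)]
```

With the cursor driven by (attention, meditation), two readings control as many engine parameters as each preset has entries, which is what makes the mapping few-to-many.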

The piece relies heavily on the control of two states of mind, measured through the “meditation” and “attention” parameters provided by the Neurosky devices themselves. The people I tested the system with all showed different degrees of control over these parameters, but, most importantly, some level of control was always present.[2] These parameters drove the control parameters of a generative music process provided by DJster.
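The Neurosky devices report “attention” and “meditation” as values on a 0-100 scale, roughly once per second. The sketch below shows one way of extracting them from a single packet and steadying them before they reach the mapping. It assumes the packet layout of the ThinkGear Connector's JSON output (an "eSense" object plus a separate "blinkStrength" field); those field names should be checked against the NeuroSky documentation, and this is not how the Java external described above works. The exponential moving average is a swapped-in smoothing technique, not something the pieces are known to have used.

```python
import json


def parse_packet(line: str):
    """Return (attention, meditation, blink) from one JSON packet; missing fields are None.

    The field names follow the assumed ThinkGear Connector JSON layout.
    """
    data = json.loads(line)
    esense = data.get("eSense", {})
    return esense.get("attention"), esense.get("meditation"), data.get("blinkStrength")


class Smoother:
    """Exponential moving average to calm the roughly once-per-second readings."""

    def __init__(self, alpha: float = 0.3):
        self.alpha = alpha  # 0 < alpha <= 1; higher values follow the input more closely
        self.value = None

    def update(self, x):
        if x is None:
            return self.value  # no new reading in this packet: hold the last smoothed value
        if self.value is None:
            self.value = float(x)
        else:
            self.value += self.alpha * (x - self.value)
        return self.value
```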

For “Mind Meld I”, the events generated by DJster controlled a multi-timbral, microtonal sampler originally created for the networked multimedia performance environment Quintet.net and now part of the MaxScore package. To help distinguish the players and provide a clear sense of dialogue, one player controlled sampled accordion sounds and the other controlled electronic sounds generated by sonifying the atomic spectrum of sulfur.
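One common way of sonifying an atomic spectrum, which may or may not match how the sulfur sounds were produced, is to transpose the optical frequency of each spectral line down by whole octaves into the audible range and keep the result as a fractional note number for a microtonal sampler. In this sketch the 110 Hz floor is an arbitrary choice, and no actual sulfur wavelengths are included.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength_to_audible(nm: float, low: float = 110.0) -> float:
    """Transpose a spectral line's optical frequency down by whole octaves into [low, 2*low)."""
    f = C / (nm * 1e-9)                       # optical frequency in Hz (hundreds of THz)
    octaves = math.floor(math.log2(f / low))  # number of octaves to drop
    return f / 2 ** octaves


def to_midi_cents(freq: float) -> float:
    """Fractional MIDI note number (A4 = 69 = 440 Hz); the fraction carries the microtonal detail."""
    return 69 + 12 * math.log2(freq / 440.0)
```

Because octave transposition preserves the ratios between lines within a pitch class, the spectrum's characteristic intervals survive. `[to_midi_cents(wavelength_to_audible(nm)) for nm in lines]` would then give note numbers for a list `lines` of the element's wavelengths in nanometres.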

A projected visualisation showed the performers' “attention” and “meditation” parameters to the audience. The parameters were mapped to the X and Y coordinates of two light shapes moving across the projection, and blink detection was used to introduce a disruption effect in the third quarter of the piece.
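The visualisation logic can be summarised in a few lines. This is a hypothetical sketch: which parameter drives which axis, the blink threshold, and the use of the full five-minute duration to locate the third quarter (150 to 225 seconds) are assumptions made for illustration.

```python
PIECE_LENGTH = 300.0  # seconds: the five-minute duration of "Mind Meld I"


def to_screen(attention: float, meditation: float, width: int, height: int):
    """Map 0-100 values to pixels; attention -> X and meditation -> Y are assumed assignments."""
    x = attention / 100.0 * width
    y = height - meditation / 100.0 * height  # screen Y grows downward, so invert
    return x, y


def blink_triggers_disruption(t: float, blink_strength, threshold: int = 50) -> bool:
    """A blink disrupts the image only during the third quarter of the piece (hypothetical threshold)."""
    in_third_quarter = 0.5 * PIECE_LENGTH <= t < 0.75 * PIECE_LENGTH
    return in_third_quarter and blink_strength is not None and blink_strength >= threshold
```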

One of the biggest challenges for the performers lies in manipulating their own thought processes to guide DJster in the direction of their choosing, in a meta-cognitive game of sorts. It is our hope that approaches such as this will allow people otherwise unable to play an instrument physically (patients with locked-in syndrome, for example) to create and enjoy music of their own.

Mind Meld II

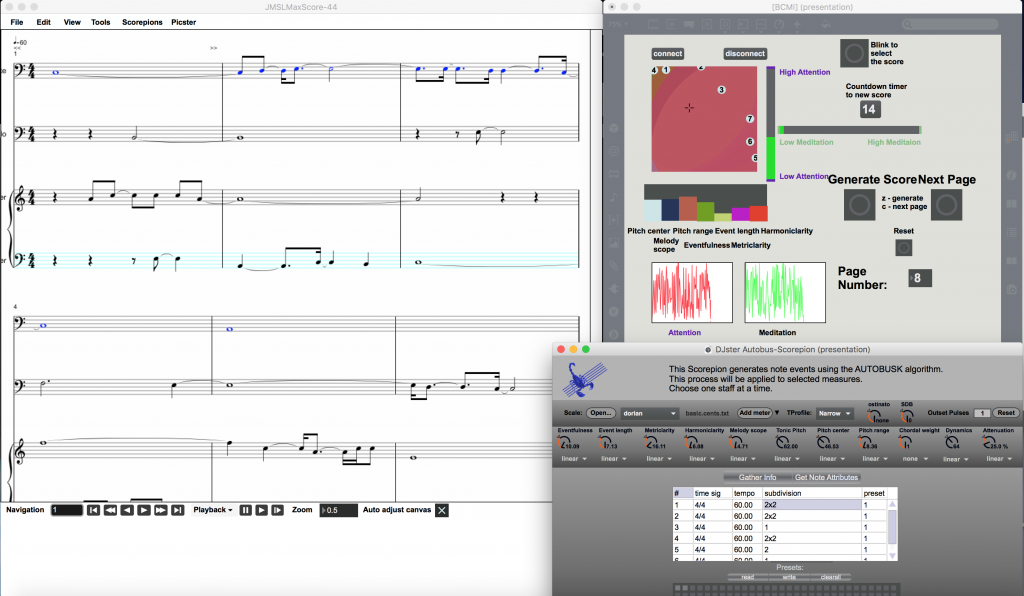

“Mind Meld II” continued the work started in “Mind Meld I” and was based on the concept of real-time score generation and performance. It was created during a real-time composition workshop at ZM4 Hamburg in 2015 and was intended to generate a score for a trio of performers. The guiding idea was to create a system that, used in a hospital setting, would give patients the opportunity to work with live performers, adding a social dimension to the entire endeavor that should by no means be underestimated.

The basic technology developed for “Mind Meld I” was expanded and refined here. Again working with the “attention” and “meditation” parameters and mapping them through the Nodes object, the non-real-time version of DJster was used, generating one page of note text at a time. The events produced by DJster were then notated using MaxScore and projected for the performers and audience to see. Some parameters, such as scale, tempo, dynamic frame and, to an extent, rhythm, were precomposed, while the others were controlled through the BCMI.

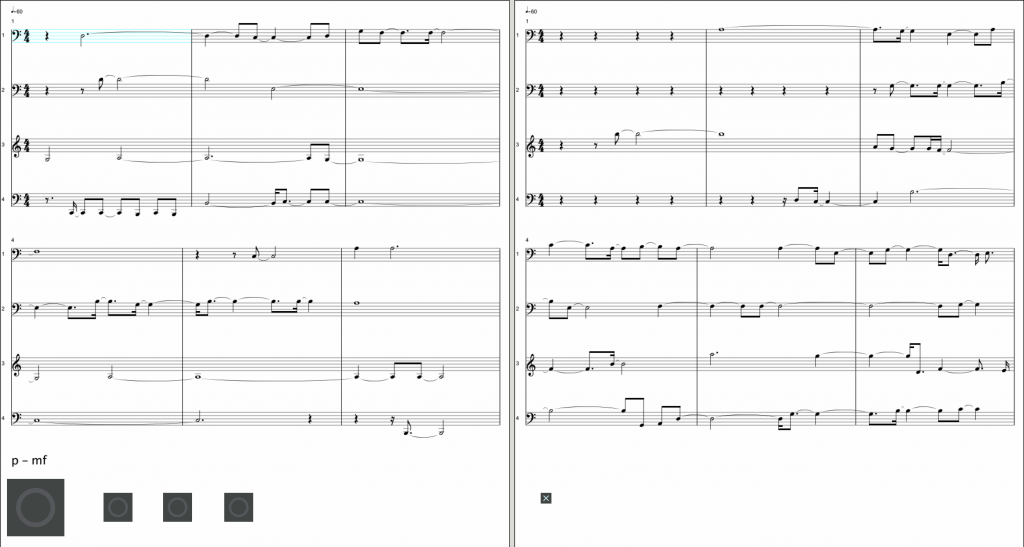

Unlike “Mind Meld I”, which was more conceptual, here I tried to move closer to a concert piece: the piece had 15 pages, totaling approximately 6 minutes of playing time. Less experienced trios could use an on-screen metronome, although ideally this would not be necessary. My personal preferences, together with the fact that sight-reading in an ensemble is quite a difficult task, led me to choose D Dorian as the mode. The types of rhythmic figures that could occur were generated from a probability table based on the overall structure of the piece. Each page had set dynamics, marking the frame within which the musicians were free to move. The musicians always saw two pages of the score: the one to be played and, as a preview, the one coming next.
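The page logic described above (a precomposed mode and dynamics, rhythmic figures drawn from a section-dependent probability table, and a two-page view) can be sketched as follows. This is not DJster's algorithm. The section names, figure names and probabilities are hypothetical, and letting “attention” set the number of events per page is only an illustrative stand-in for the BCMI-controlled parameters.

```python
import random

# Precomposed pitch material: D Dorian (pitch names only; octaves left to the notation stage).
D_DORIAN = ["D", "E", "F", "G", "A", "B", "C"]

# Hypothetical probability table: likelihood of each rhythmic figure in each section.
RHYTHM_TABLE = {
    "opening": {"whole": 0.5, "half": 0.3, "quarter": 0.2},
    "middle": {"half": 0.3, "quarter": 0.4, "eighths": 0.3},
    "closing": {"quarter": 0.3, "eighths": 0.4, "triplet": 0.3},
}


def generate_page(section: str, dynamics: str, attention: float, rng: random.Random) -> dict:
    """Build one page of events.

    section and dynamics are precomposed; attention (0-100) is the BCMI input,
    mapped here (hypothetically) to the number of events on the page.
    """
    n_events = 4 + round(attention / 100 * 8)  # 4 to 12 events per page
    table = RHYTHM_TABLE[section]
    figures = rng.choices(list(table), weights=list(table.values()), k=n_events)
    pitches = [rng.choice(D_DORIAN) for _ in figures]
    return {"dynamics": dynamics, "events": list(zip(figures, pitches))}


def visible_pages(pages: list, current: int) -> list:
    """The page being played plus the next one as a preview (only one page at the very end)."""
    return pages[current:current + 2]
```

Keeping the mode and dynamics outside the BCMI's reach is what keeps the output sight-readable, while the brain signal still shapes each page.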

The material for the piece was generated from the brain signals of an additional performer, who was instructed to remain still with her eyes closed and to follow the flow of the music mentally. These instructions were meant to create a connection, a sort of feedback loop, between the “composer” on stage, writing the music with her mind, and the musicians playing it. The progressively more complicated rhythms, faster movements and wider dynamic range, all inherent in the system, would influence the “composer's” attention and, in turn, reshape the tonal and melodic content of the piece.

Acceptance

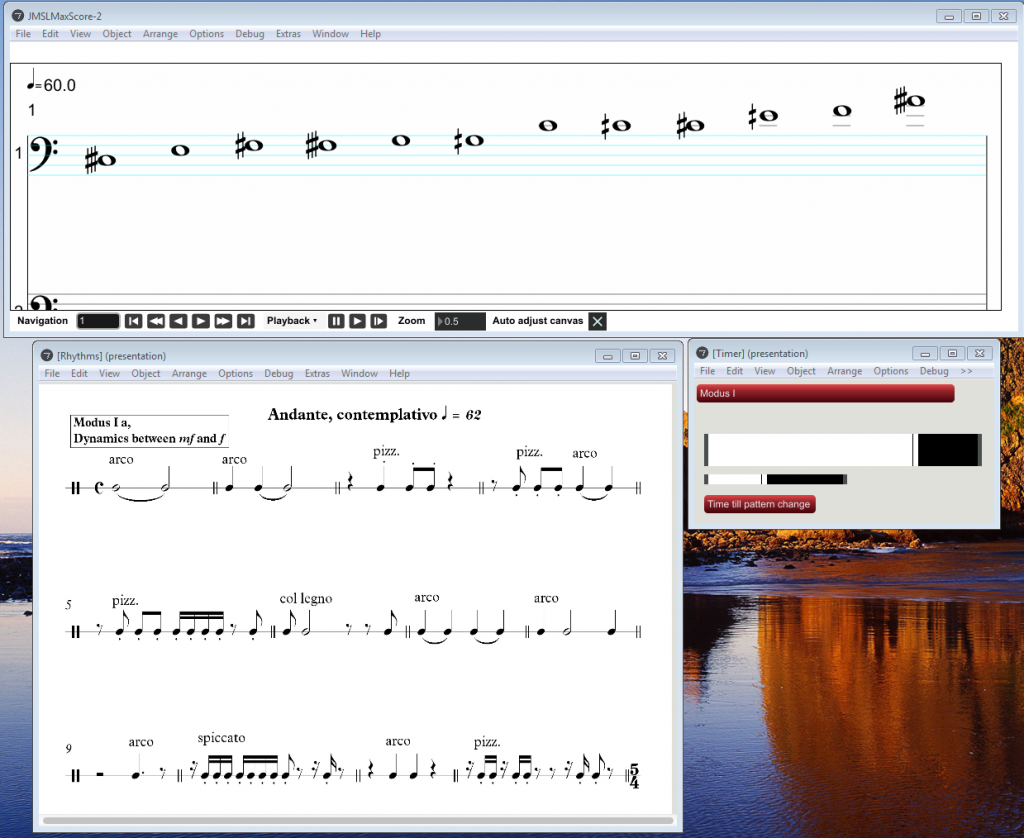

The latest piece featuring BCMI technology, “Acceptance”, was the final part of the “Metanoia” multimedia project, performed in July 2017 in Hamburg. It was a piece for solo double bass in which the pitch material was generated in real time from an FFT analysis of the performer's raw EEG data. The question I tried to answer was: “To what extent is the pitch material important for musical expression?”

Rooted in the dramaturgy of the entire project, the piece works closely with the ideas of feedback and introspection. Formally, it consists of three larger parts, each subdivided into two rhythmic modi. The performer wore the Neurosky device, and his brain patterns as well as his facial electromyographic activity were sampled into a buffer, which was then analyzed to create a “scale”: a different set of pitches for each part of the composition. Using the spectra2midi~ abstraction developed by Benedict Carrey, the data were put through FFT analysis and the output was sent to the performer on stage. Precomposed rhythmic patterns were organized into modi: the player was free to choose any combination of the patterns presented on his screen within the allotted time, and to use any tones belonging to the current scale. As in “Mind Meld II”, the rhythmic patterns and the dynamic frames were the main guiding elements. The piece lasts approximately 8 minutes; all modi have predefined durations except the last, in which the performer may ignore the timer at his discretion.
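To illustrate the principle of turning a buffer of raw EEG into a “scale”, here is a dependency-free Python sketch. It is not spectra2midi~ and does not reproduce its behaviour. It assumes a raw sample rate of 512 Hz and takes the strongest spectral peaks between 1 and 40 Hz, converting each to a pitch class. Transposing a frequency by octaves does not change its pitch class, so the class is computed directly from the EEG frequency. The EMG component mentioned above is not treated separately.

```python
import math

FS = 512  # Hz: assumed raw EEG sample rate of the headset


def magnitude_spectrum(samples):
    """Plain DFT magnitudes for bins 0..N/2 (slow, but needs no external libraries)."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        mags.append(math.hypot(re, im))
    return mags


def scale_from_eeg(samples, n_pitches=5, fmin=1.0, fmax=40.0):
    """Pitch classes (0 = C) of the strongest spectral peaks between fmin and fmax Hz."""
    n = len(samples)
    mags = magnitude_spectrum(samples)
    bins = [k for k in range(1, len(mags)) if fmin <= k * FS / n <= fmax]
    scale = []
    for k in sorted(bins, key=lambda b: mags[b], reverse=True):
        freq = k * FS / n
        # Octave shifts leave the pitch class unchanged, so round the (possibly negative)
        # MIDI number and reduce it modulo 12; Python's % always returns 0..11 here.
        pc = round(69 + 12 * math.log2(freq / 440.0)) % 12
        if pc not in scale:
            scale.append(pc)
        if len(scale) == n_pitches:
            break
    return sorted(scale)
```

Recomputing the scale from a fresh buffer for each part of the piece would reproduce the feedback described next: whatever changes in the performer's state changes the pitches he is given.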

The first EEG readings were taken before the piece started, and the performer was instructed to keep as still as possible during that time. As the piece progressed, both his physical movements (driven by the dynamics and articulation) and the mental effort of combining the pitches with the rhythmic patterns of each modus changed. This in turn influenced the generated pitch material, creating a feedback loop in which the performer was playing to the sound of his own mind.

Where to next?

Our experience with these three projects shed light on some of the particularities of working with BCMIs and led us to believe that both further work and new approaches are needed if we wish to attempt clinical trials. The most prominent problem we encountered had to do with the devices themselves and their Bluetooth connection. While the Neurosky devices performed well in the studio, on stage they proved quite unreliable: distances in a concert setting are much larger, and interference from other devices, such as the audience's cell phones, is always a big unknown. Battery life also came into play, further complicating the issue. The native Neurosky driver (ThinkGear Connector) only works with Windows, which is also a problem, since we prefer platform-independent software solutions, or at least ones that support both Windows and macOS. All in all, we cannot recommend these devices for live performance. As a tool for a hospital setting, however, they do have advantages: low weight, low price, great ease of use and setup, and the fact that their faults matter less there than in a concert. We hope to test Muse and Emotiv devices in the future.

From a conceptual standpoint, we realized that the “attention” and “meditation” parameters, although the easiest to use and control, are calculated by the manufacturer using algorithms based on the workings of a healthy brain. With our ultimate goal of working with comatose or locked-in patients in mind, we strongly doubt that these algorithms would perform as intended under such circumstances. We therefore hope to develop our own analytical strategies based on the raw EEG data provided by the sensors. DJster as a generative engine and MaxScore as a vehicle for notation both seem to be the right choices, and we look forward to reaching the stage in our research when we can try, in at least some small way, to improve the lives of patients.
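A conventional first step towards analysing raw EEG data independently of the manufacturer's algorithms is to compute band powers. The sketch below is one such starting point, not a strategy the project has settled on. The band edges follow common conventions, which vary between sources, and whether these bands carry useful information for comatose or locked-in patients is exactly the open question raised above.

```python
import math

# Commonly used EEG bands in Hz; exact edges differ between sources.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_power(samples, fs, lo, hi):
    """Sum of squared DFT magnitudes over the bins whose frequency lies in [lo, hi)."""
    n = len(samples)
    total = 0.0
    for k in range(1, n // 2 + 1):
        if lo <= k * fs / n < hi:
            re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
            im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
            total += re * re + im * im
    return total


def relative_band_powers(samples, fs):
    """Each band's share of the combined power of the listed bands (0 if there is no power)."""
    powers = {name: band_power(samples, fs, lo, hi) for name, (lo, hi) in BANDS.items()}
    total = sum(powers.values())
    return {name: (p / total if total else 0.0) for name, p in powers.items()}
```

Relative rather than absolute powers are used here because electrode contact, and therefore signal amplitude, can vary from session to session.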

[1] E. R. Miranda and M. Wanderley, New Digital Musical Instruments: Control and Interaction Beyond the Keyboard, Computer Music and Digital Audio Series, vol. 21. Middleton, WI: A-R Editions, 2006.

[2] For me personally, influencing the “attention” parameter was relatively reliable, simply by doing math or reading, while the “meditation” parameter continued to elude me.